LM Studio: The AI Powerhouse for Running LLMs Locally, Free to Use

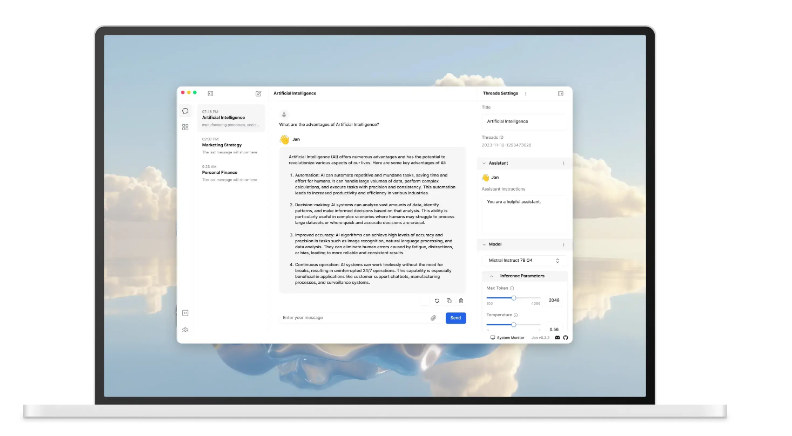

If you’re diving into the world of local AI models and want a robust, easy-to-use platform to run them, LM Studio is your new best friend. It offers a streamlined way to download, manage, and run large language models (LLMs) like Llama right on your desktop.

Whether you’re a developer, AI enthusiast, or someone curious about running AI without cloud dependence, LM Studio packs a punch with its intuitive interface and REST API for backend integration.

Let’s break down what LM Studio offers, why it matters, and the platforms it supports.

What is LM Studio?

LM Studio is a free desktop application designed to help you run large language models locally on your machine. The app itself is closed-source, though its command-line tool and SDKs are published openly on GitHub. No cloud, no subscriptions, no middlemen. It simplifies the process of downloading models like Llama, setting them up, and interacting with them through an easy-to-use GUI and backend REST API.

In an era where data privacy is a growing concern, LM Studio empowers you to keep AI processing on your local machine. Whether you’re experimenting with prompts, developing apps, or automating tasks with AI, LM Studio gives you full control.

Key Features

- Built-in Model Downloads: Easily download and manage popular LLMs like Llama 2, Mistral, and others.

- Local Inference: Run AI models on your own hardware. Once a model is downloaded, no internet access is needed.

- Backend REST API: LM Studio provides a REST API, making it easy for developers to integrate local AI models into their applications.

- Easy-to-Use GUI: The interface is straightforward, making it simple for both beginners and experienced users to navigate.

- Performance Monitoring: Track model performance and system resource usage in real time.

Supported Platforms

LM Studio is available for the most common desktop operating systems, ensuring you can get started regardless of your platform of choice:

- macOS (Apple Silicon)

- Windows (x64)

- Linux (x64)

Downloadable LLMs

LM Studio supports direct downloads of several popular open-source large language models.

Some of the notable ones include:

- Llama 2 by Meta

- Mistral models

- OpenChat models

- TinyLlama

You can quickly add these models to your library and run them with just a few clicks. No need to manually fiddle with model files or dependencies.

Developer-Friendly REST API

For developers looking to integrate AI into their workflows or applications, LM Studio’s backend REST API is a dream come true. With the API, you can send prompts to your locally running models and receive responses programmatically. This opens up endless possibilities, such as:

- Integrating AI into chatbots

- Creating content generation tools

- Enhancing automation workflows with AI responses

- Using AI for local data analysis
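
To make the chatbot case a little more concrete, here is a minimal Python sketch of a conversation loop that keeps its own message history. It rests on several assumptions that are not stated above: that the local server exposes an OpenAI-style `/v1/chat/completions` endpoint, that it listens on `localhost:1234`, and that the reply text sits under `choices[0].message.content`. `ChatSession` and `post_json` are hypothetical names chosen for this example, and some server setups may also expect a `model` field in the payload.

```python
import json
import urllib.request

# Assumption: address of the local server; change it to match your setup.
CHAT_URL = "http://localhost:1234/v1/chat/completions"


def post_json(url, payload, timeout=60):
    """POST a JSON payload and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


class ChatSession:
    """Keeps the running message history for one conversation."""

    def __init__(self, system_prompt, send=post_json, url=CHAT_URL):
        self.messages = [{"role": "system", "content": system_prompt}]
        self._send = send  # injectable so the class can be tried without a server
        self._url = url

    def ask(self, user_text):
        """Append the user turn, query the model, and record its reply."""
        self.messages.append({"role": "user", "content": user_text})
        reply = self._send(self._url, {"messages": self.messages})
        # Assumption: OpenAI-style response shape.
        answer = reply["choices"][0]["message"]["content"]
        self.messages.append({"role": "assistant", "content": answer})
        return answer
```

With the server running, `ChatSession("You are a helpful assistant.").ask("Hello!")` would send the full history on every turn, which is how the model gets to "remember" earlier messages. Passing a different `send` function lets you swap the transport or stub it out.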

Example API Request

Here’s a simple curl request to interact with your locally running LLM via LM Studio. Start the local server in LM Studio first; it listens on port 1234 by default, so adjust the port if you changed it:

curl -X POST http://localhost:1234/v1/completions \
  -H "Content-Type: application/json" \
  -d '{"prompt": "What is the capital of France?", "max_tokens": 50}'

The response comes back as JSON containing the model’s output, and your prompt never leaves your machine.
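
If you would rather call the API from code than from the shell, the same request can be sketched in Python using only the standard library. The base URL below is an assumption (port 1234 on localhost), so change it to whatever your LM Studio server shows. The function names are hypothetical, and the code assumes an OpenAI-style response with the generated text under `choices[0].text`.

```python
import json
import urllib.request

# Assumption: address of the local server; change it to match your setup.
BASE_URL = "http://localhost:1234"


def build_completion_request(prompt, max_tokens=50, base_url=BASE_URL):
    """Build a POST request for the /v1/completions endpoint."""
    payload = {"prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        url=f"{base_url}/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt, max_tokens=50, base_url=BASE_URL, timeout=60):
    """Send the prompt to the local server and return the generated text."""
    request = build_completion_request(prompt, max_tokens, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # Assumption: OpenAI-style response shape.
    return body["choices"][0]["text"]
```

With the server running and a model loaded, `complete("What is the capital of France?")` returns the generated text as a string. Splitting request construction from sending keeps the payload easy to inspect before anything goes over the wire.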

Why Use LM Studio?

- Privacy-Friendly: No data leaves your computer. Perfect for sensitive or confidential tasks.

- Offline Capability: Run AI models without an internet connection.

- Cost-Effective: No recurring fees. LM Studio is free to download and use.

- Fast and Reliable: No network round trips, rate limits, or outages from a cloud API. Response speed depends on your own hardware.

- Flexible for Developers: Seamless backend integration with its REST API.

Final Words

LM Studio is a powerful, free tool that makes local AI accessible and practical. If you’re tired of cloud-based models and want more control over your AI workflows, LM Studio is worth exploring. It’s simple enough for beginners, yet robust enough for developers looking to integrate AI into their apps.

Ready to give it a try?

Download LM Studio and experience the future of local AI:

- Official Website: lmstudio.ai

- GitHub Organization (CLI and SDKs): github.com/lmstudio-ai

Local AI is here. Embrace it.